Transhumanism might be described as the technology of advanced individual enhancement. While it includes physical modifications (diamondoid teeth, self-styling hair, autocleaning ears, nanotube bones, lipid metabolizers, polymer muscles), most of the interest in the technology focuses on the integration of brains and computers — especially brains and networks. Sample transhumanist apps could include cell phone implants (which would allow virtual telepathy), memory backups and augmenters, thought recorders, reflex accelerators, collaborative consciousness (whiteboarding in the brain), and a very long list of thought-controlled actuators. Ultimately, the technology could extend to the uploading and downloading of entire minds in and out of host bodies, providing a self-consciousness that, theoretically, would have no definitive nor necessary end. That is, immortality, of a sort.

He also cautions against the blanket condemnation of enhancement technologies, complaining about the impracticality of enforcing such a policy of restraint:

Still, it's not clear that boycotting neurotech will be a realistic option. When the people around you — competitors, colleagues, partners — can run Google searches in their brains during conversations; or read documents upside down on a desk 30 feet away; or remember exactly who said what, when and where; or coordinate meeting tactics telepathically; or work forever without sleep; or control every device on a production line with thought alone, your only probable alternative is to join them or retire. No corporation could ignore the competitive potential of a neurotech-enhanced workforce for long.

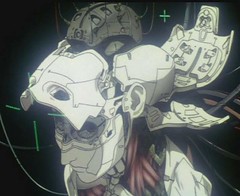

And as a CIO, Hapgood also offers some practical solutions to mind violations of the kind depicted in Ghost in the Shell:

One possible approach to neurosecurity might be to implant a public-key infrastructure in our brains so that every neural region can sign and authenticate requests and replies from any other region. A second might be maintaining a master list of approved mental activities and blocking any mental operations not on that list. (Concerns about whether the list itself was corrupted might be addressed by refreshing the list constantly from implanted and presumably unhackable ROM chips.) It might also be necessary to outsource significant portions of our neural processing to highly secure computing sites. In theory, such measures might improve on the neurosecurity system imposed on us by evolution, making us less vulnerable to catchy tunes and empty political slogans.

Definitely give this article a read.
